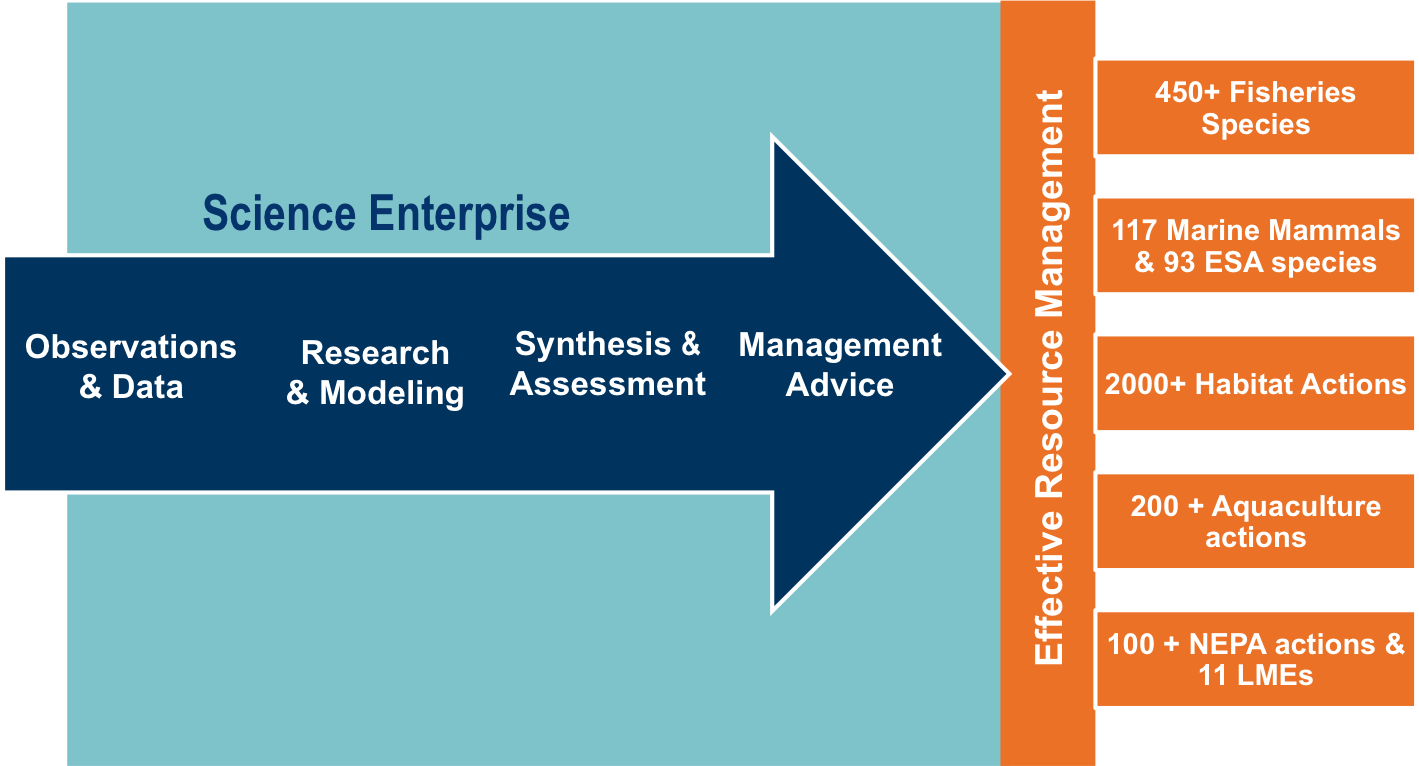

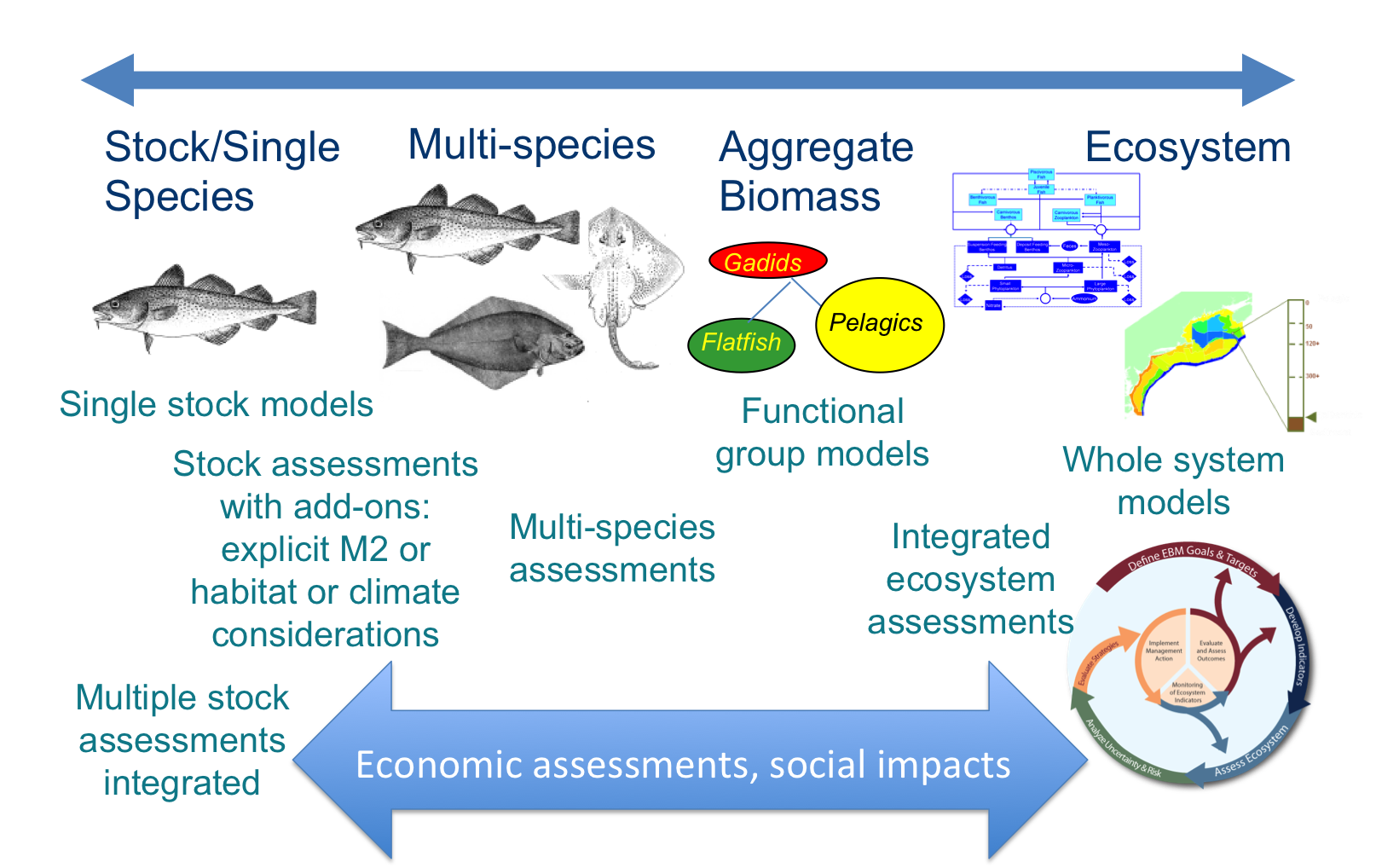

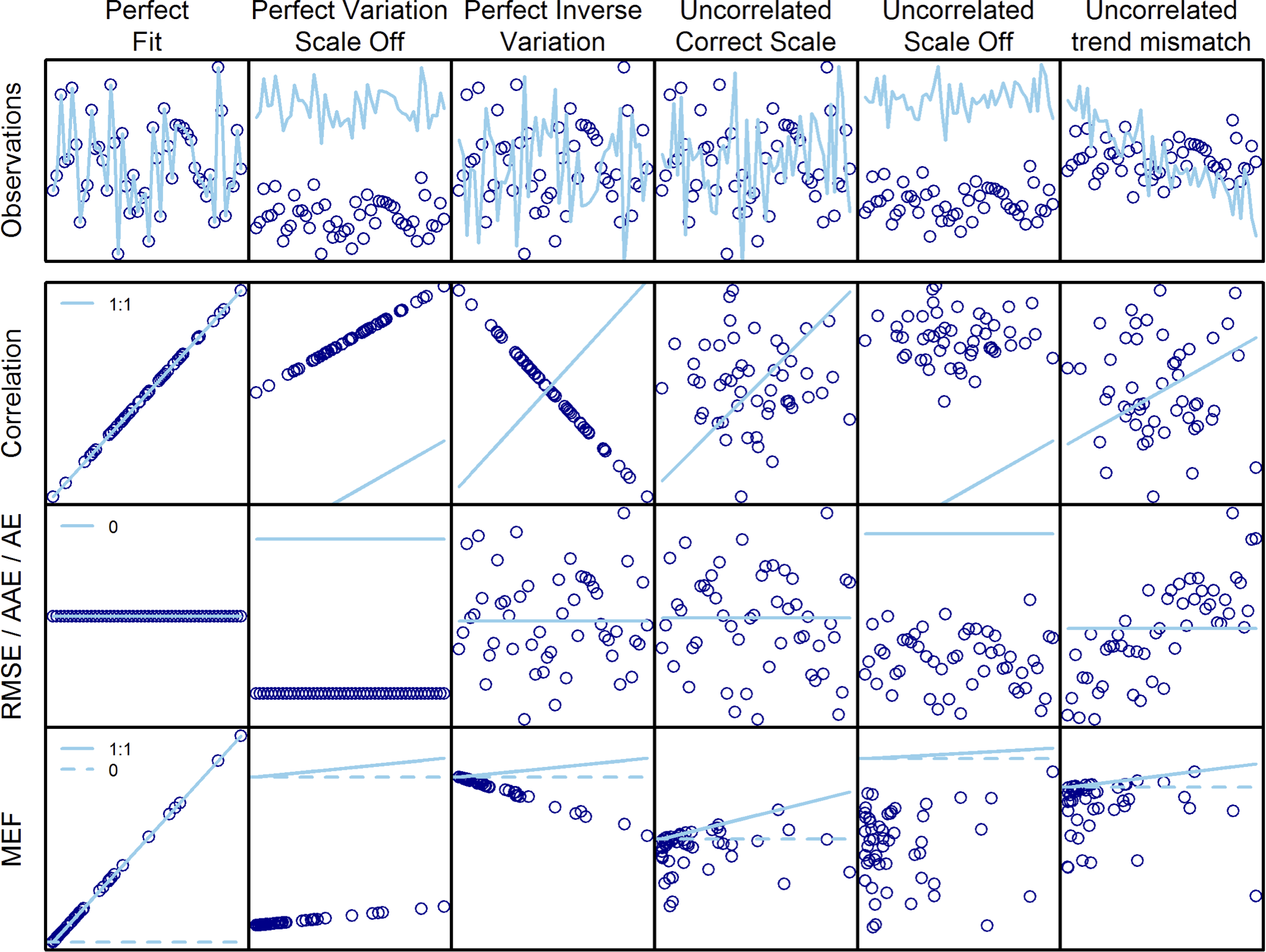

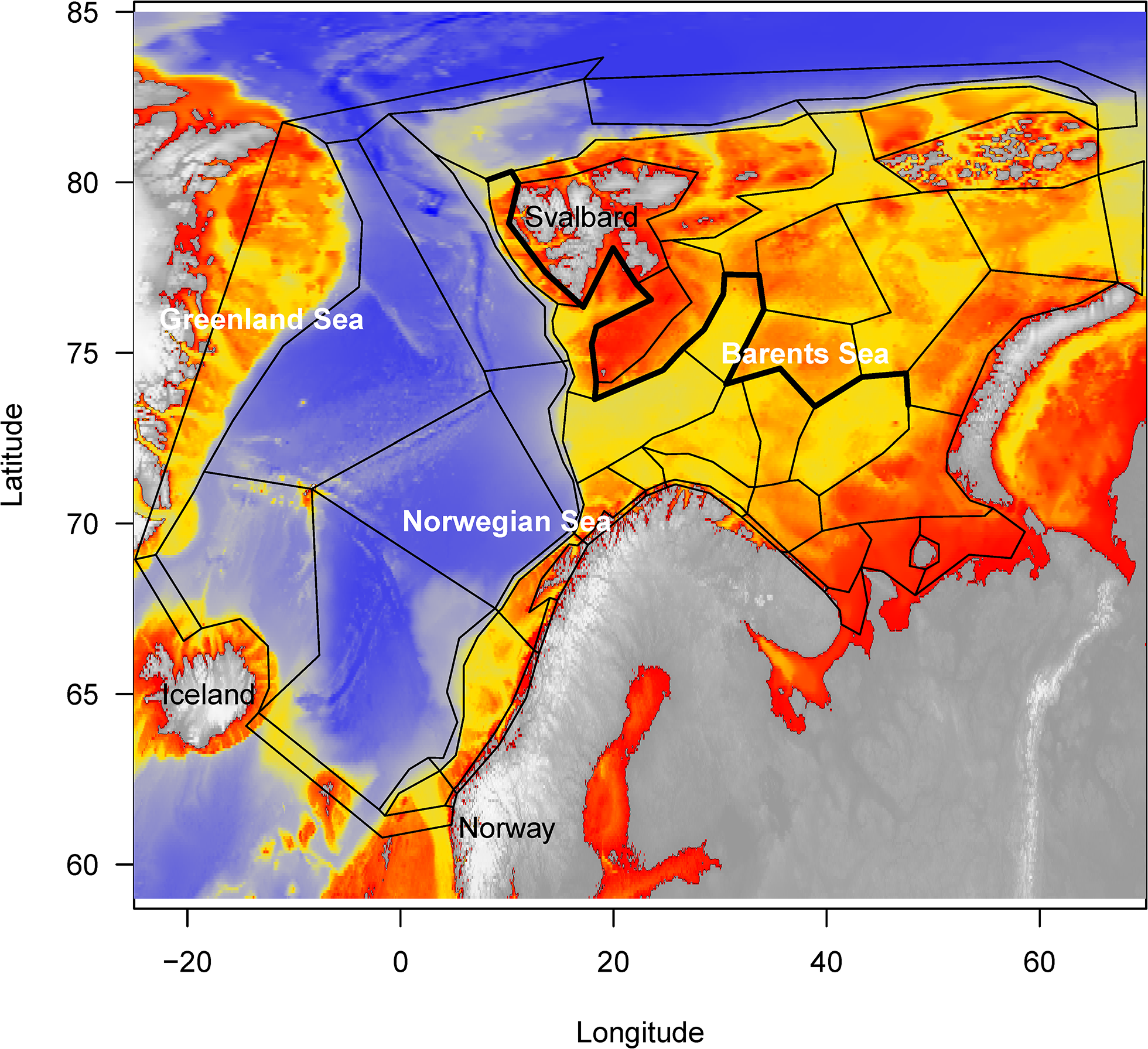

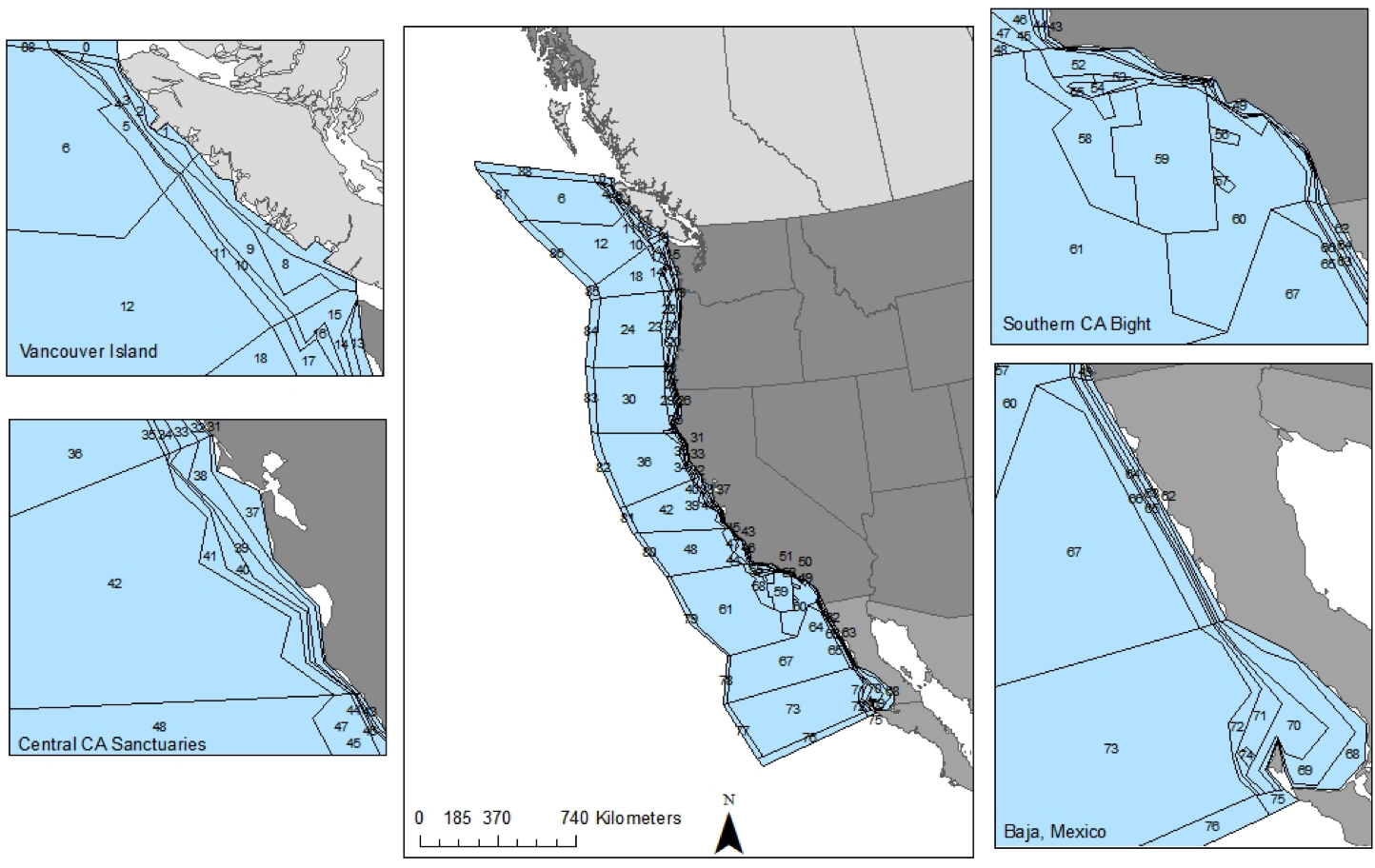

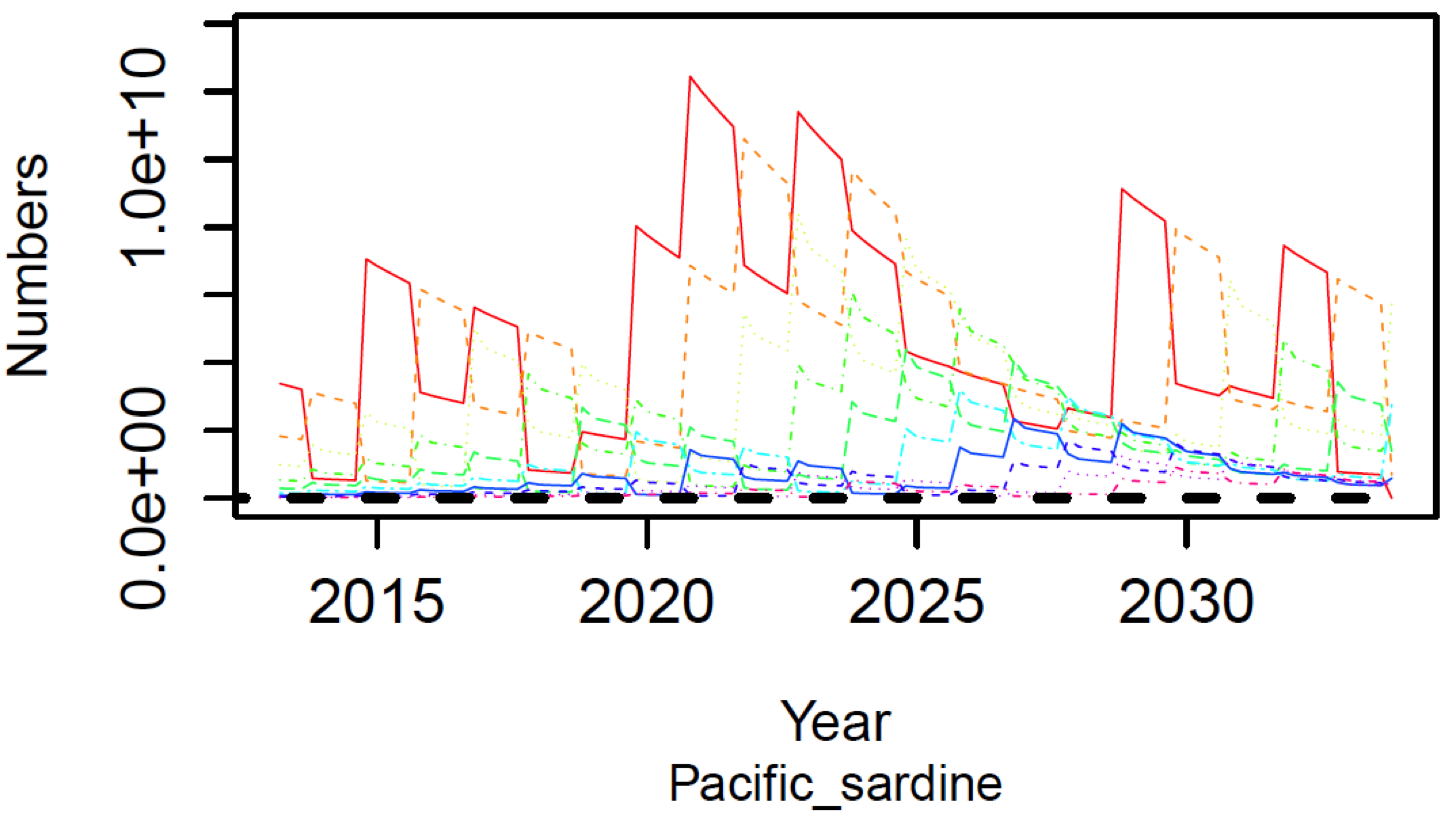

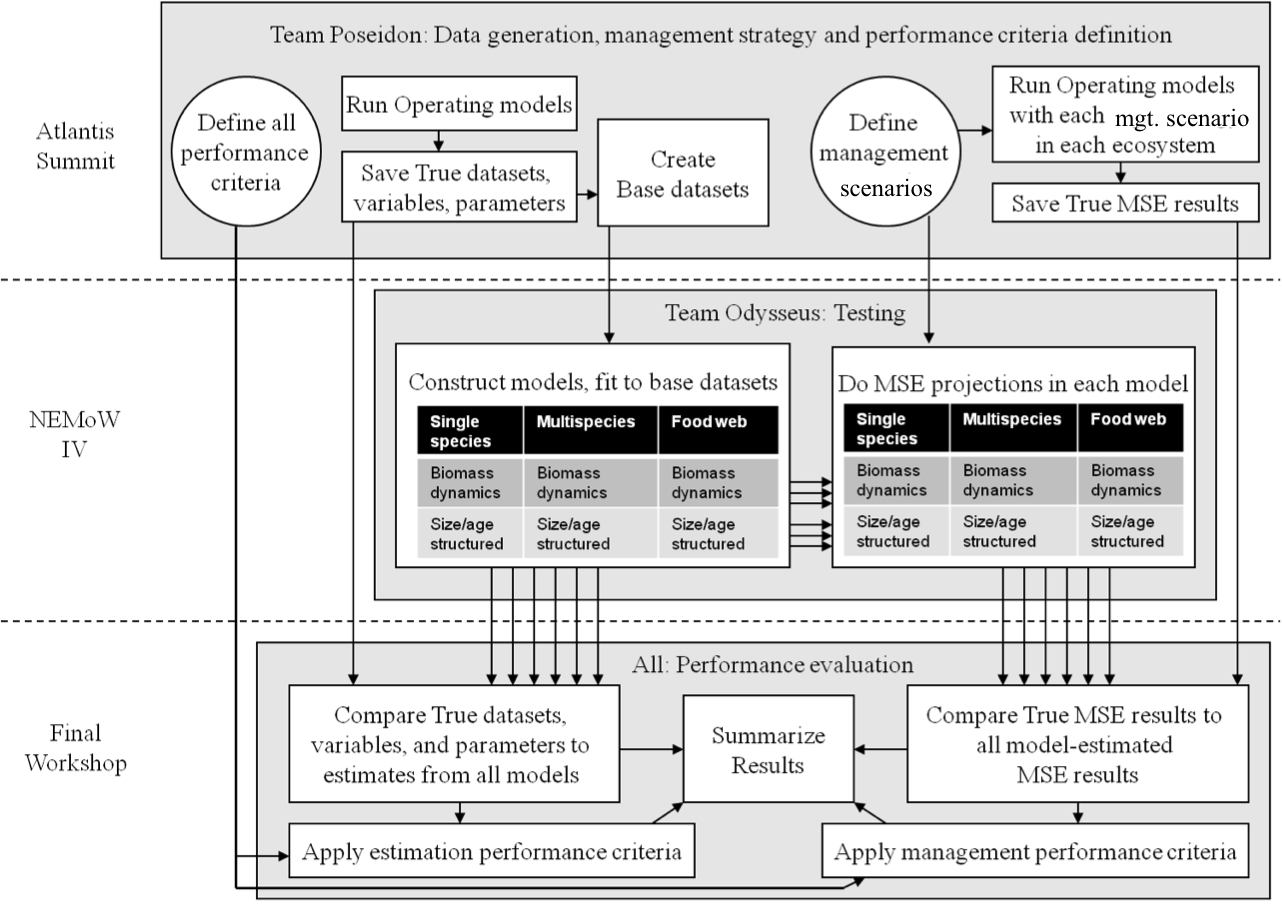

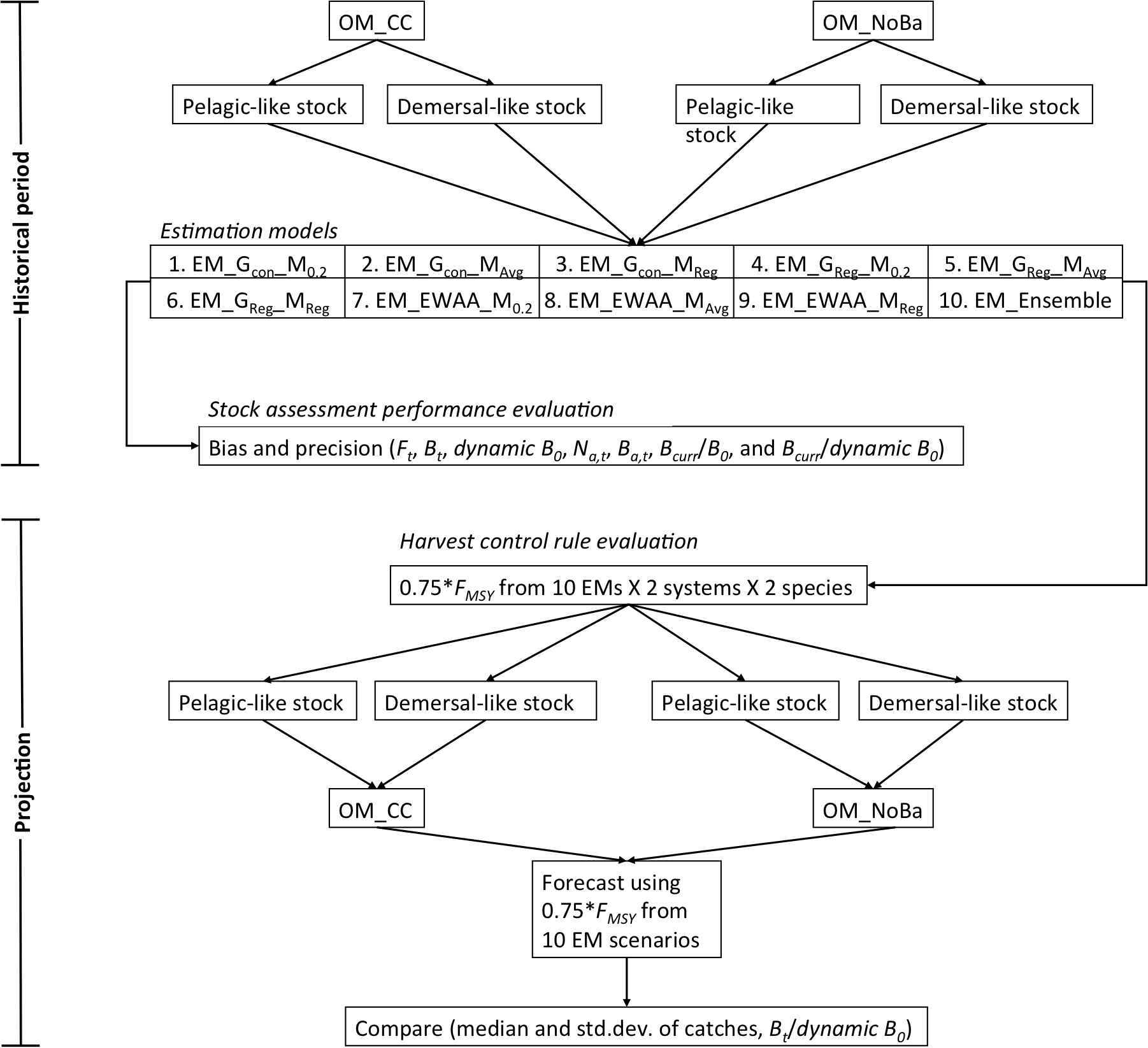

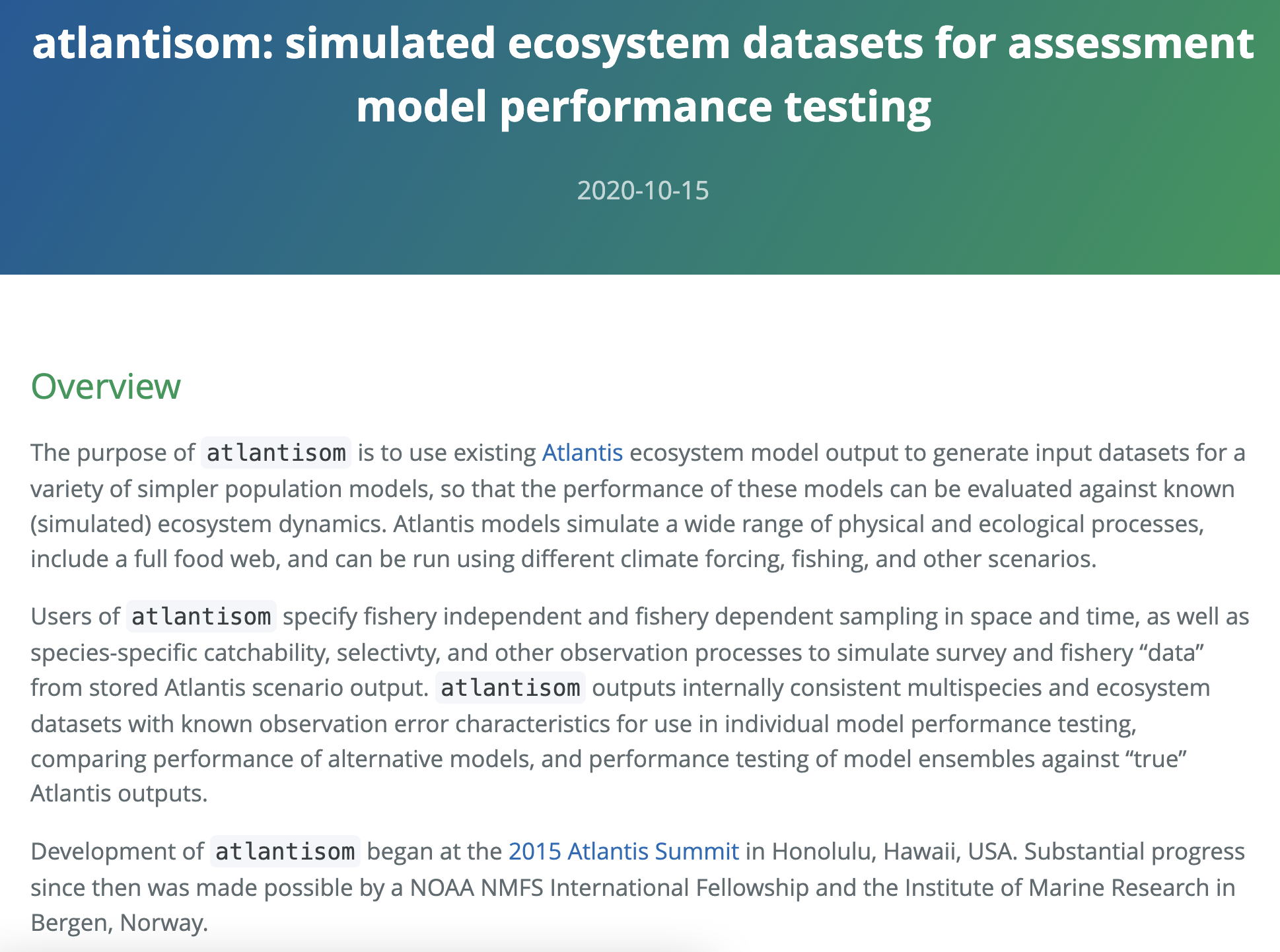

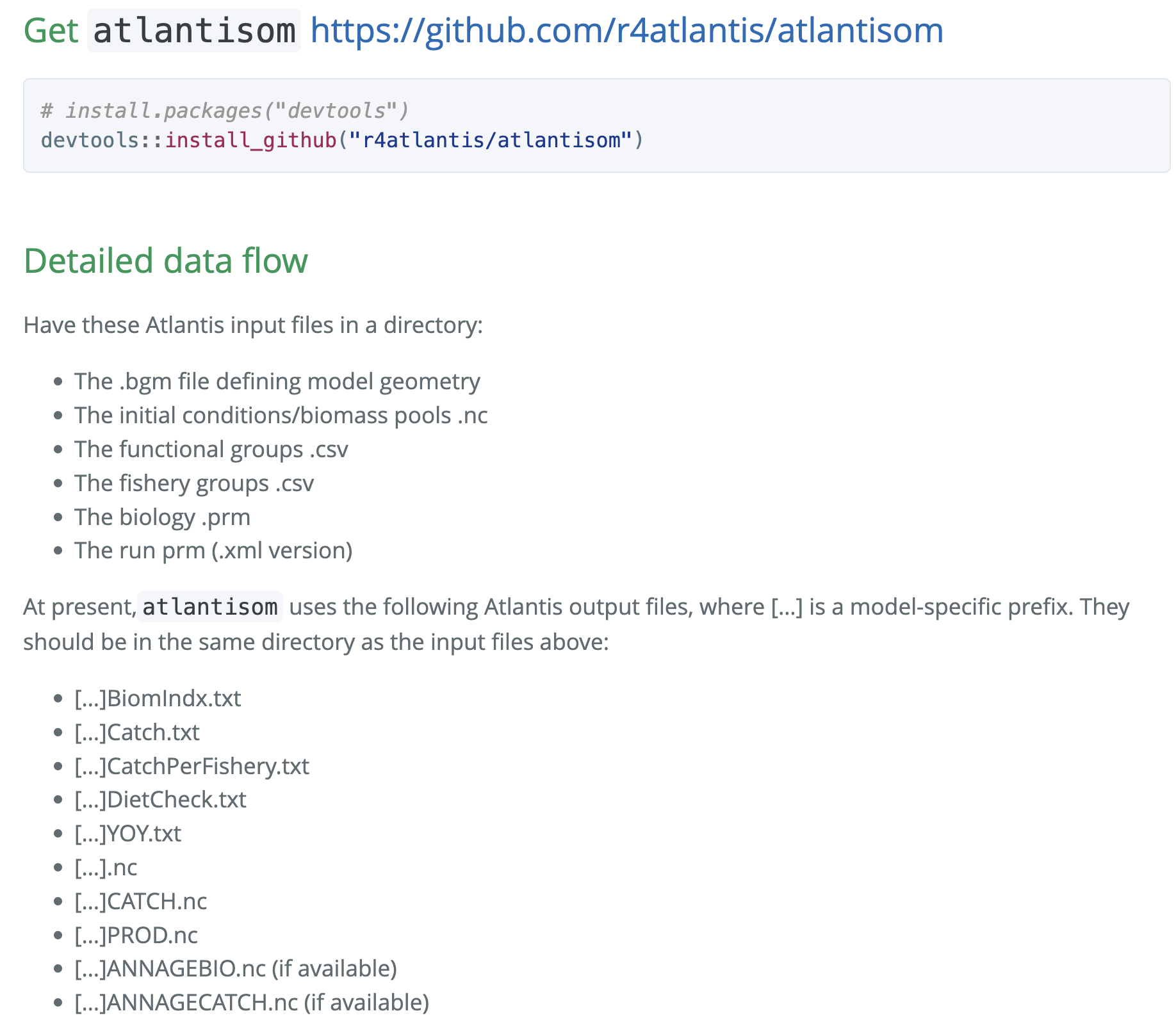

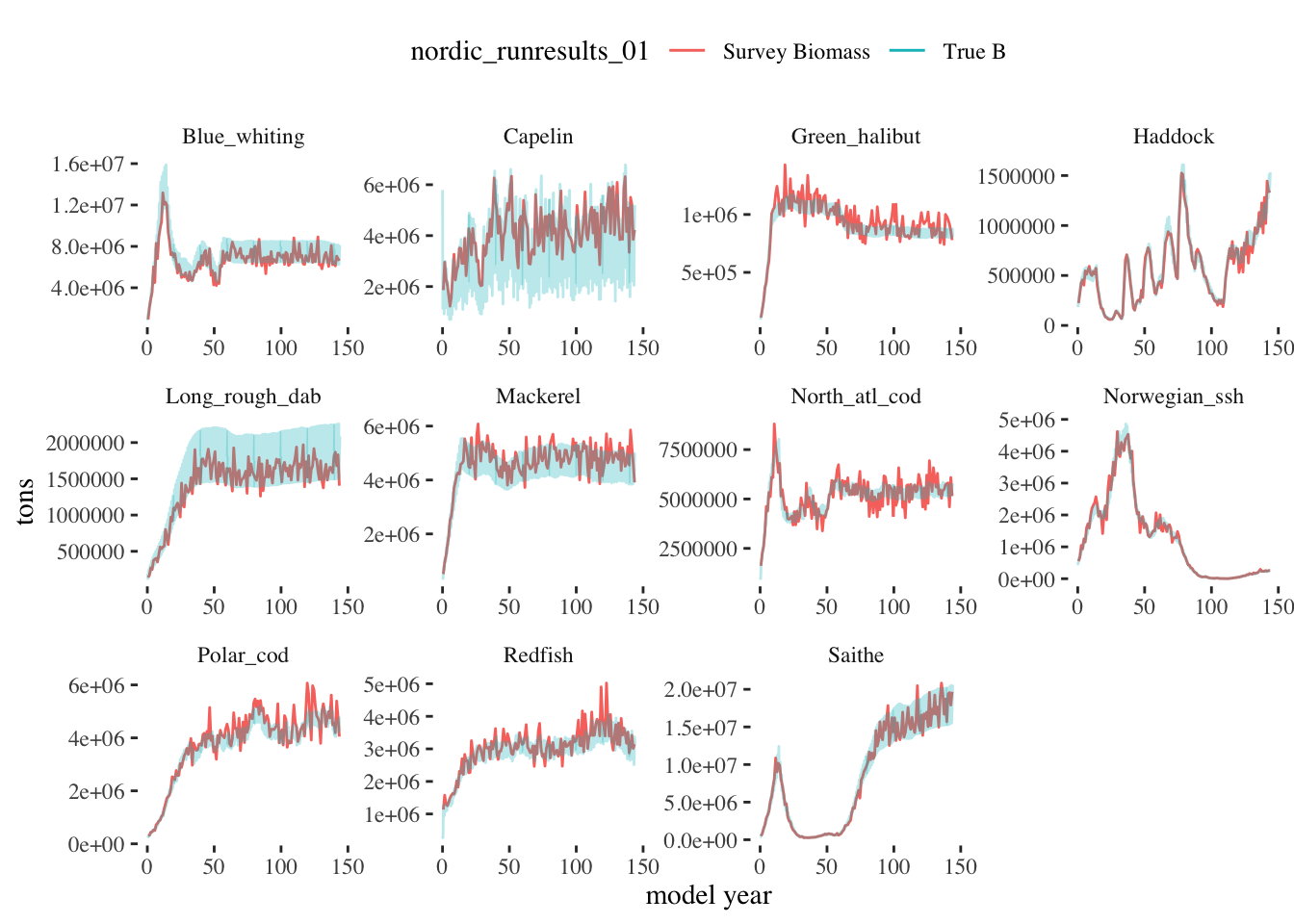

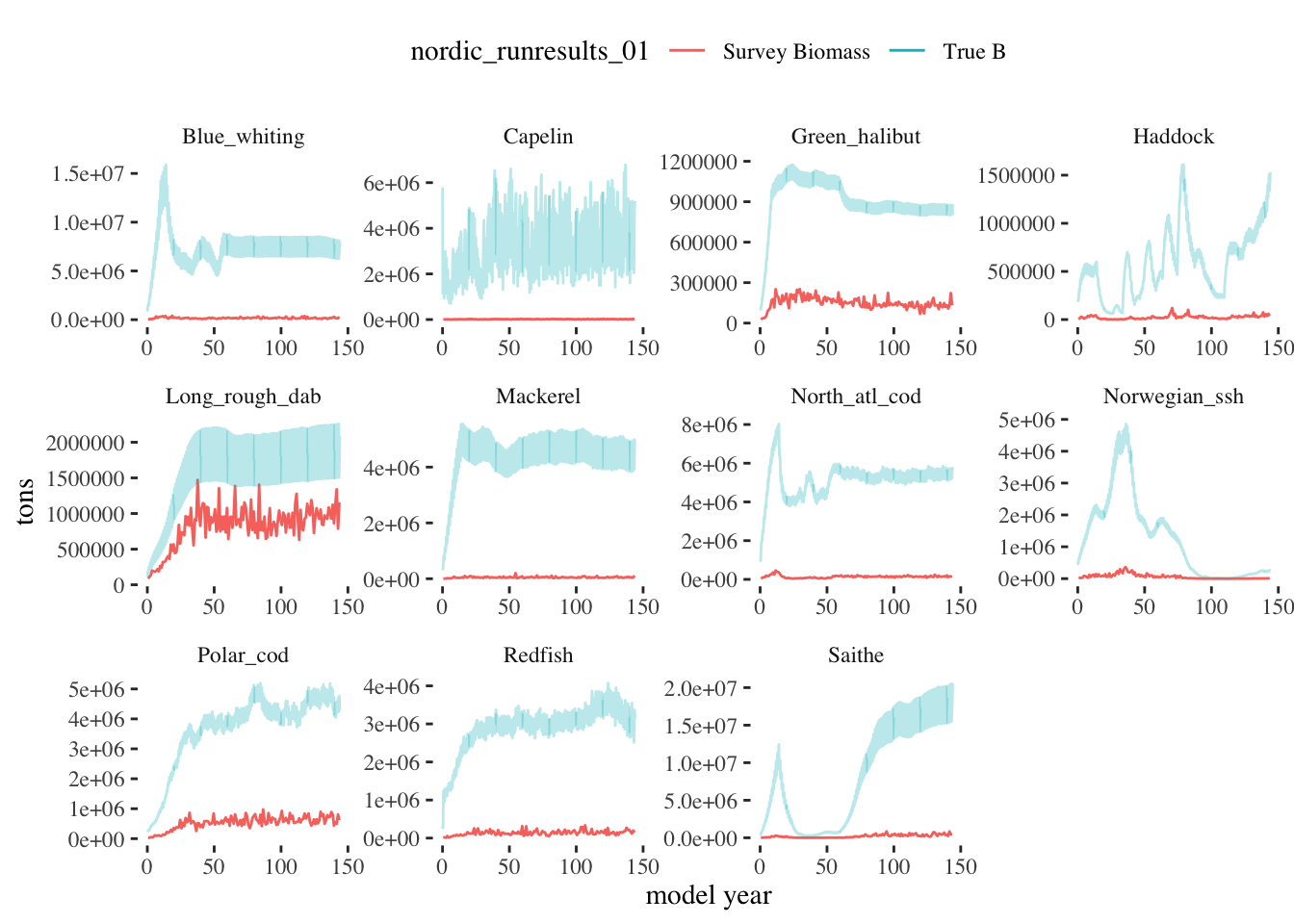

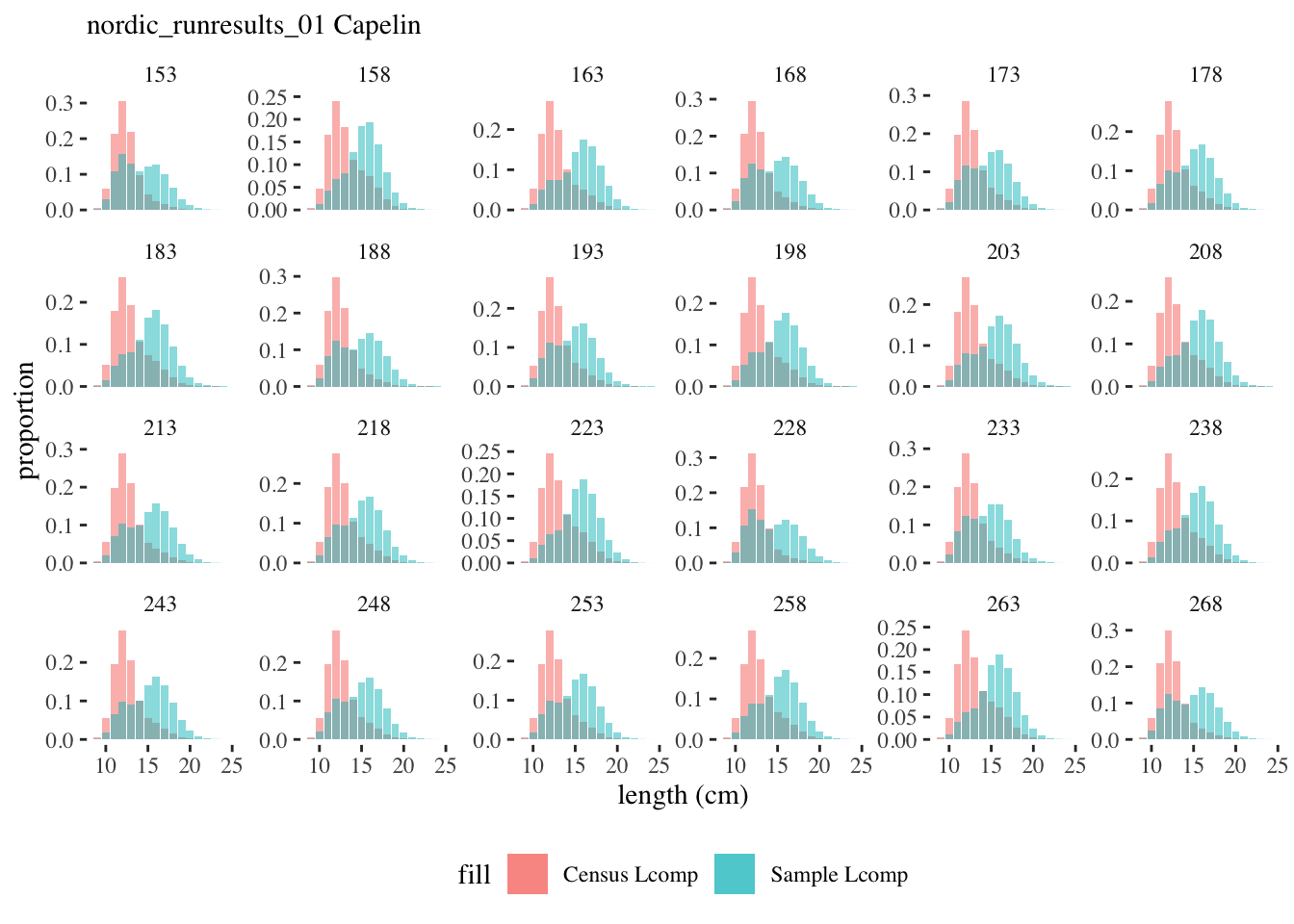

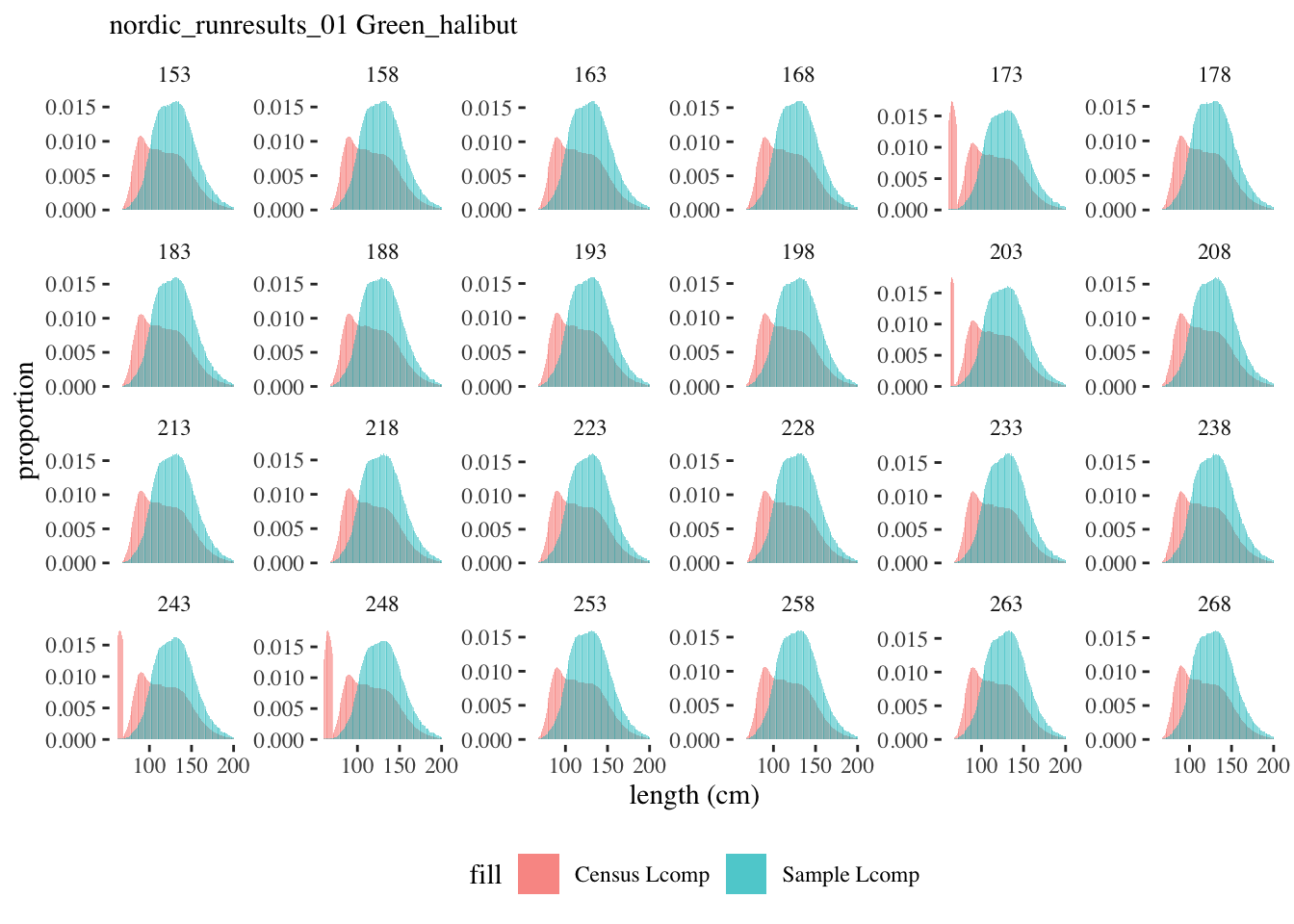

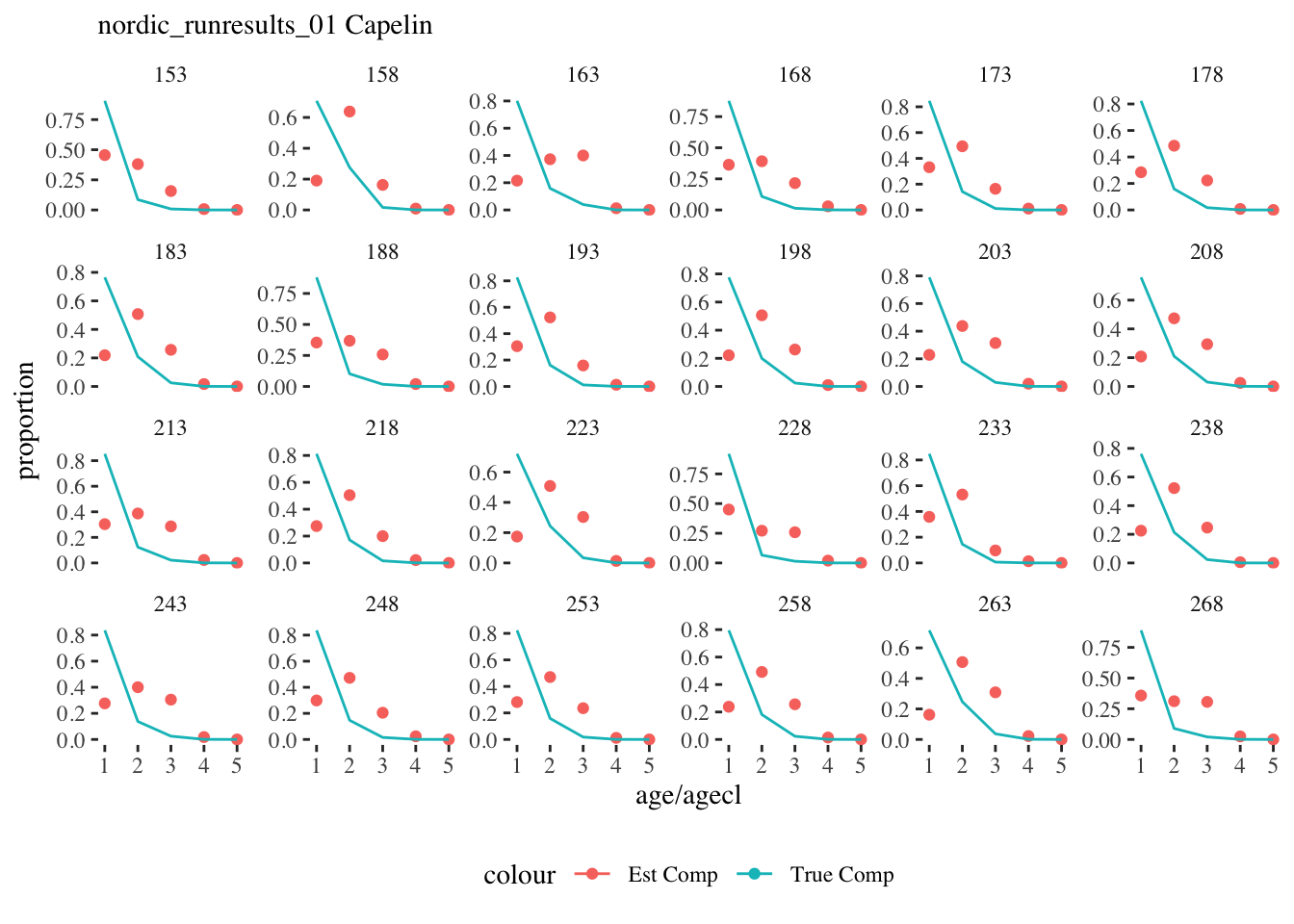

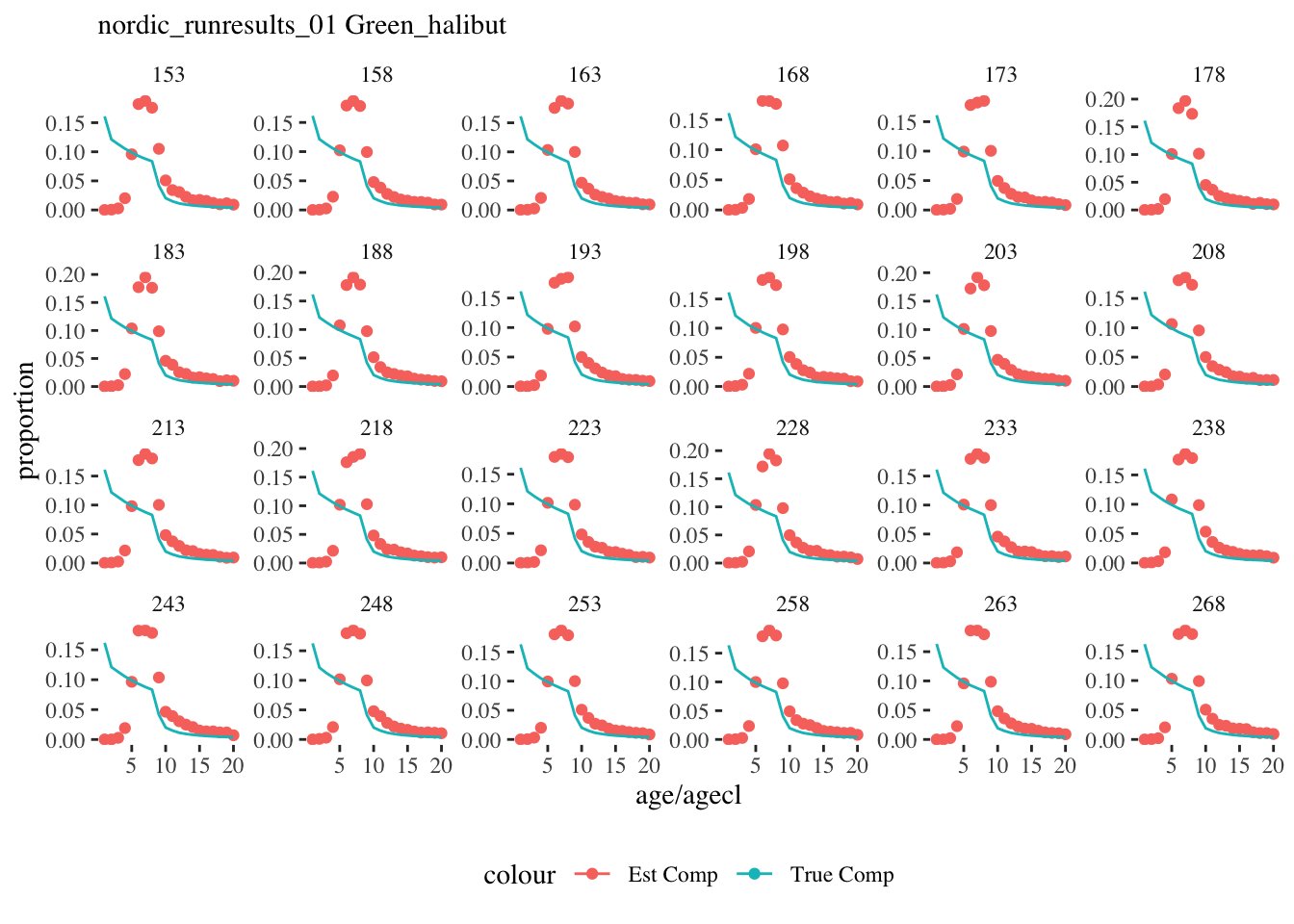

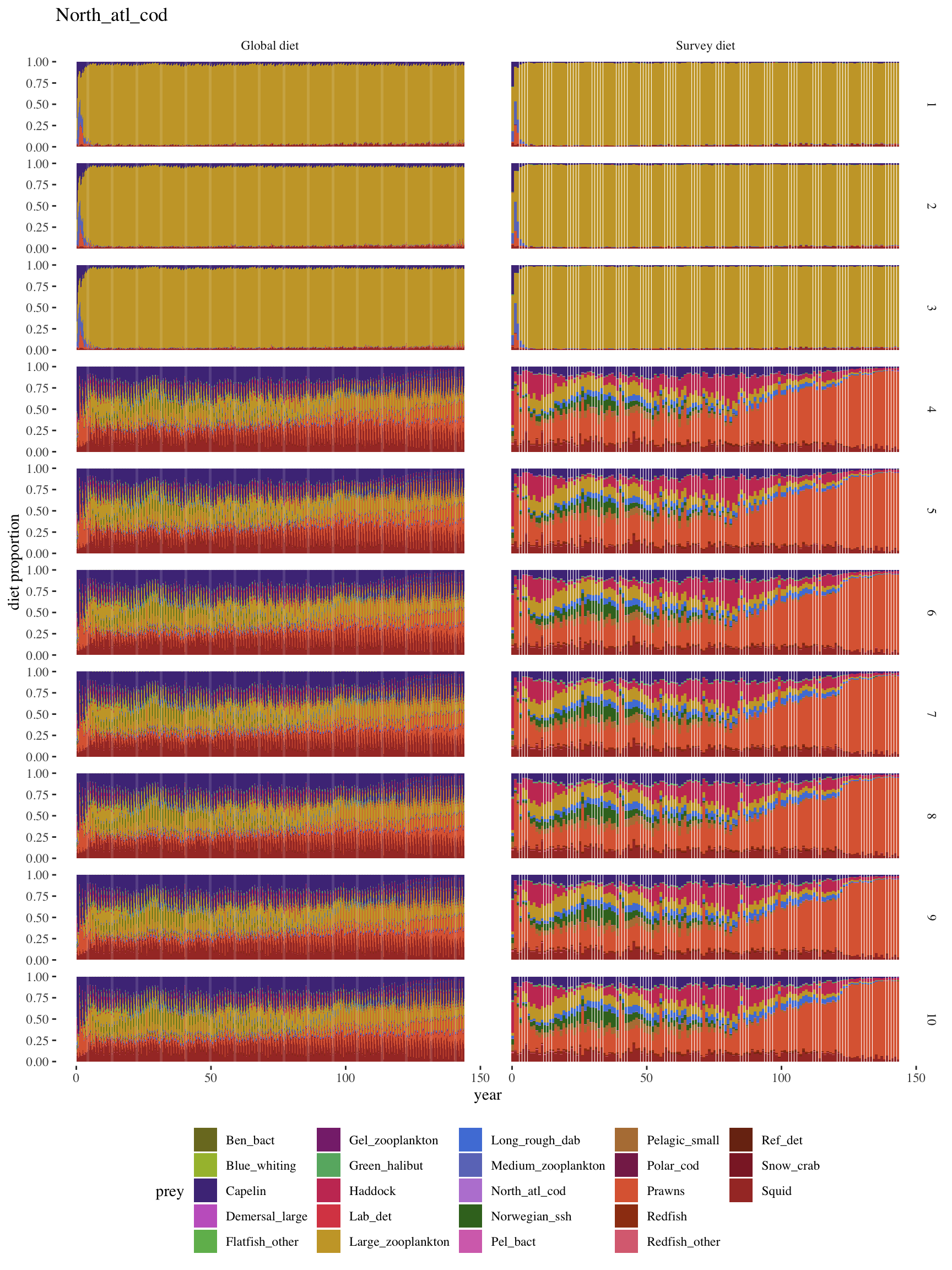

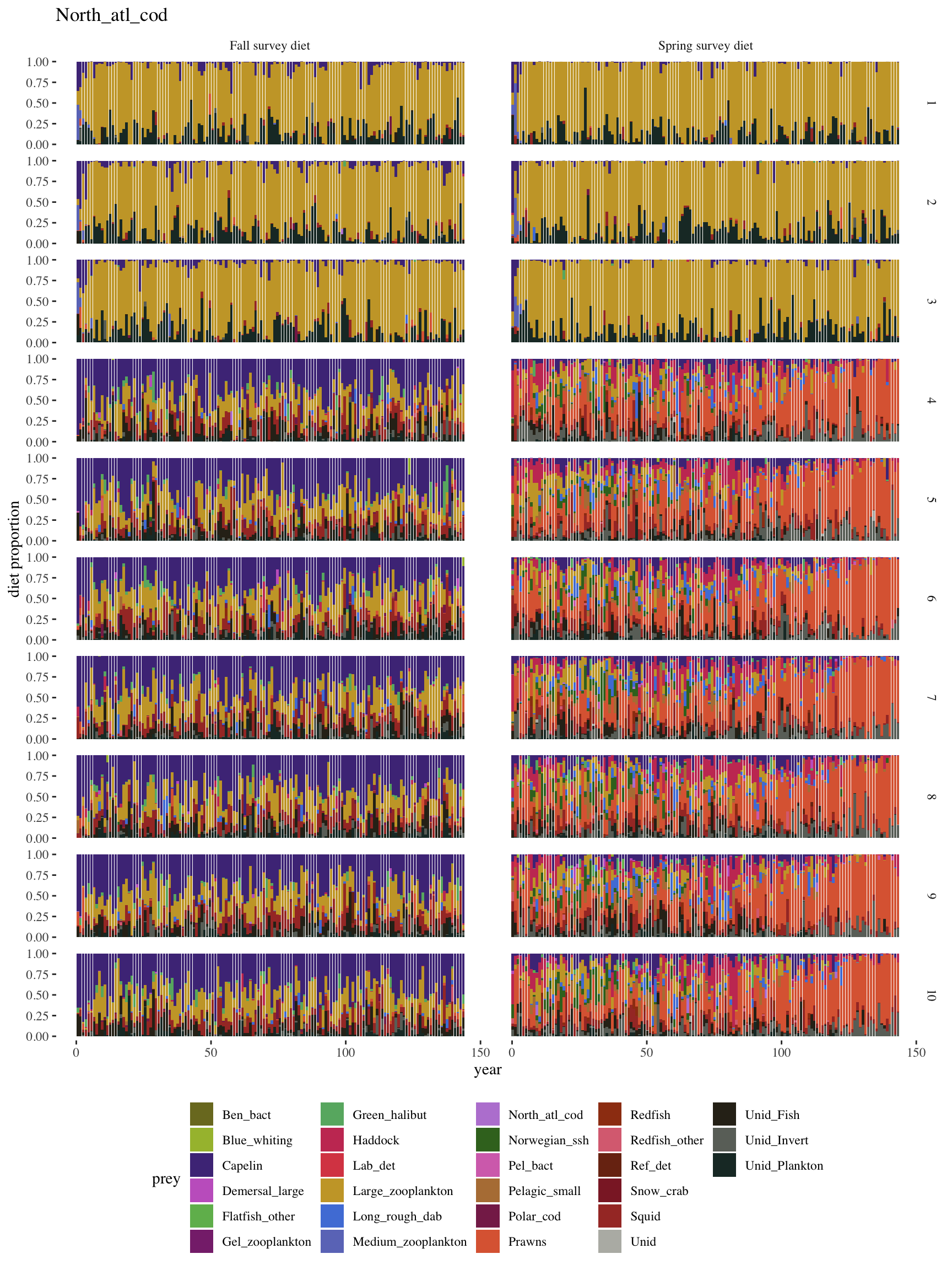

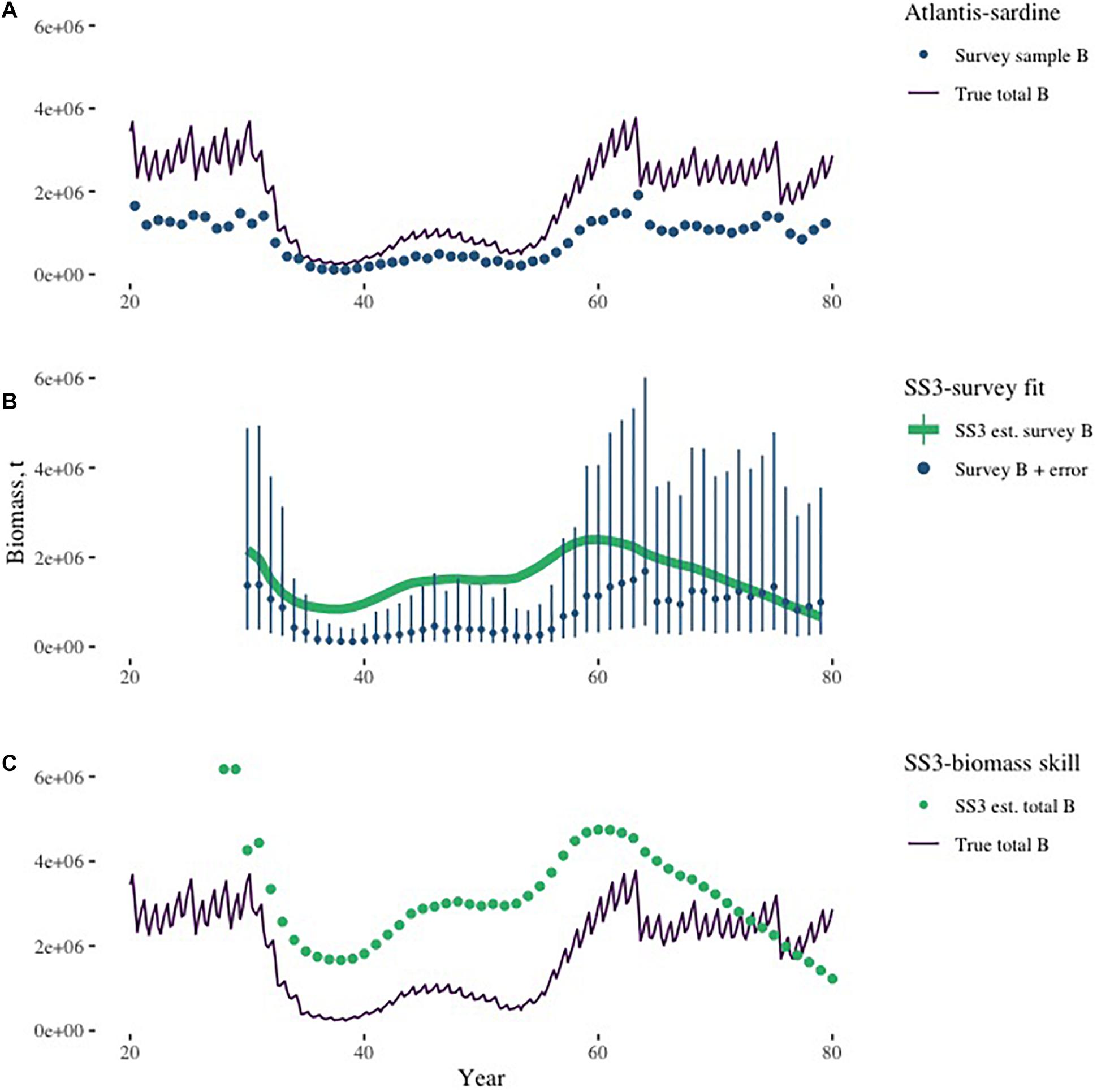

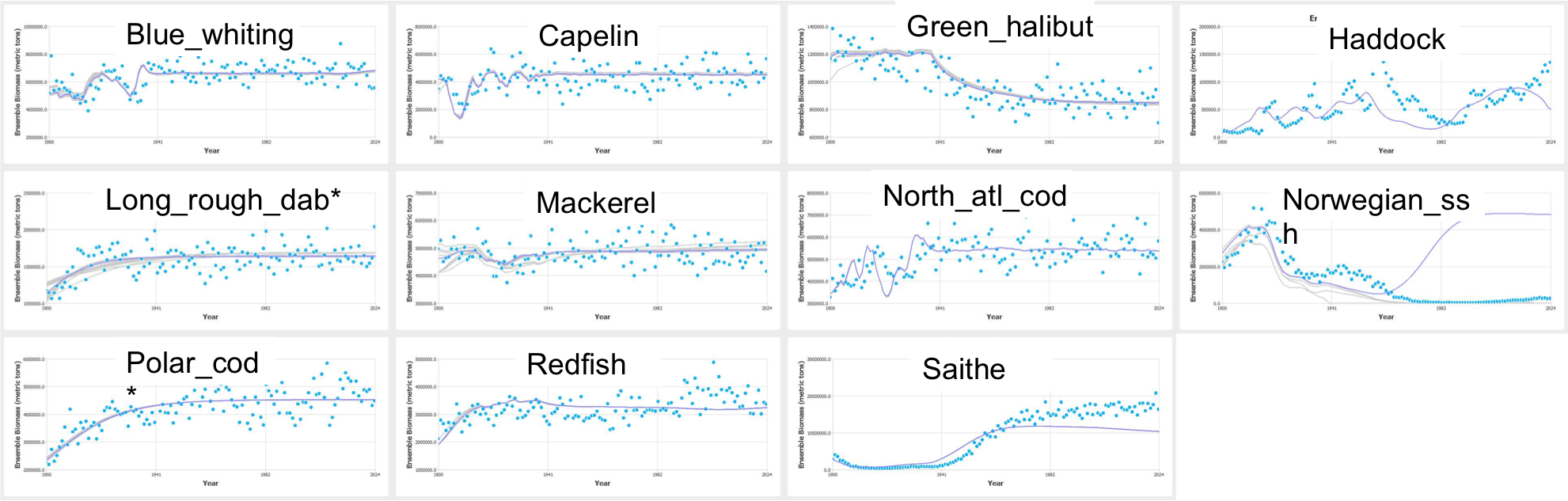

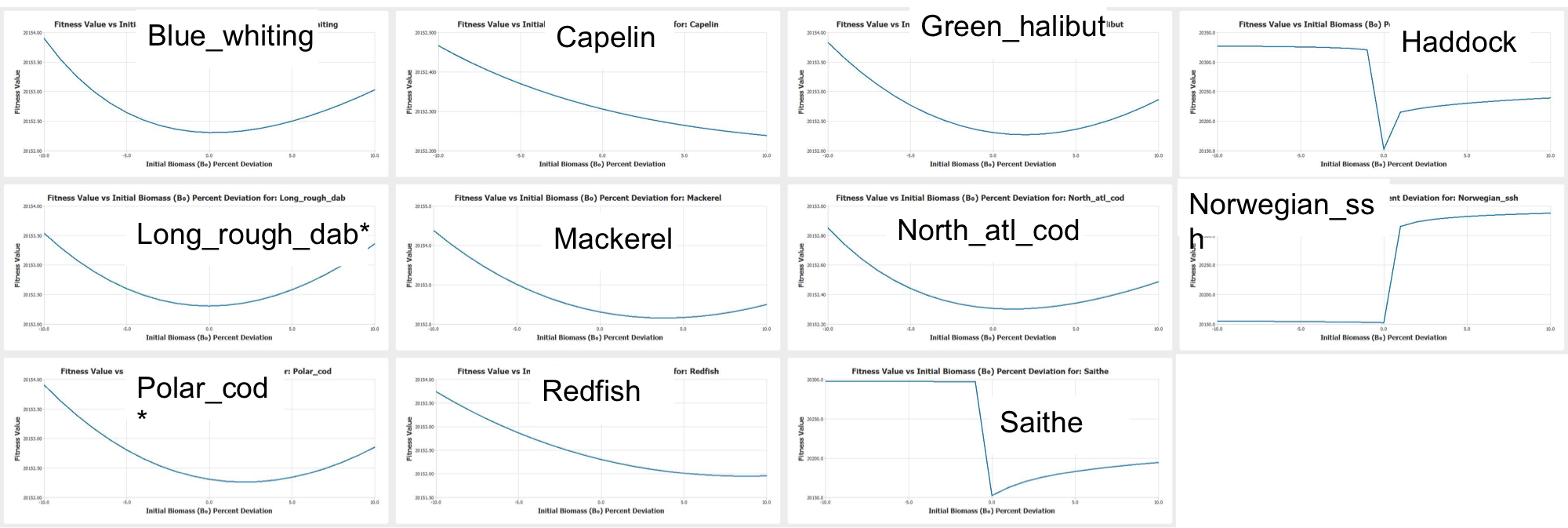

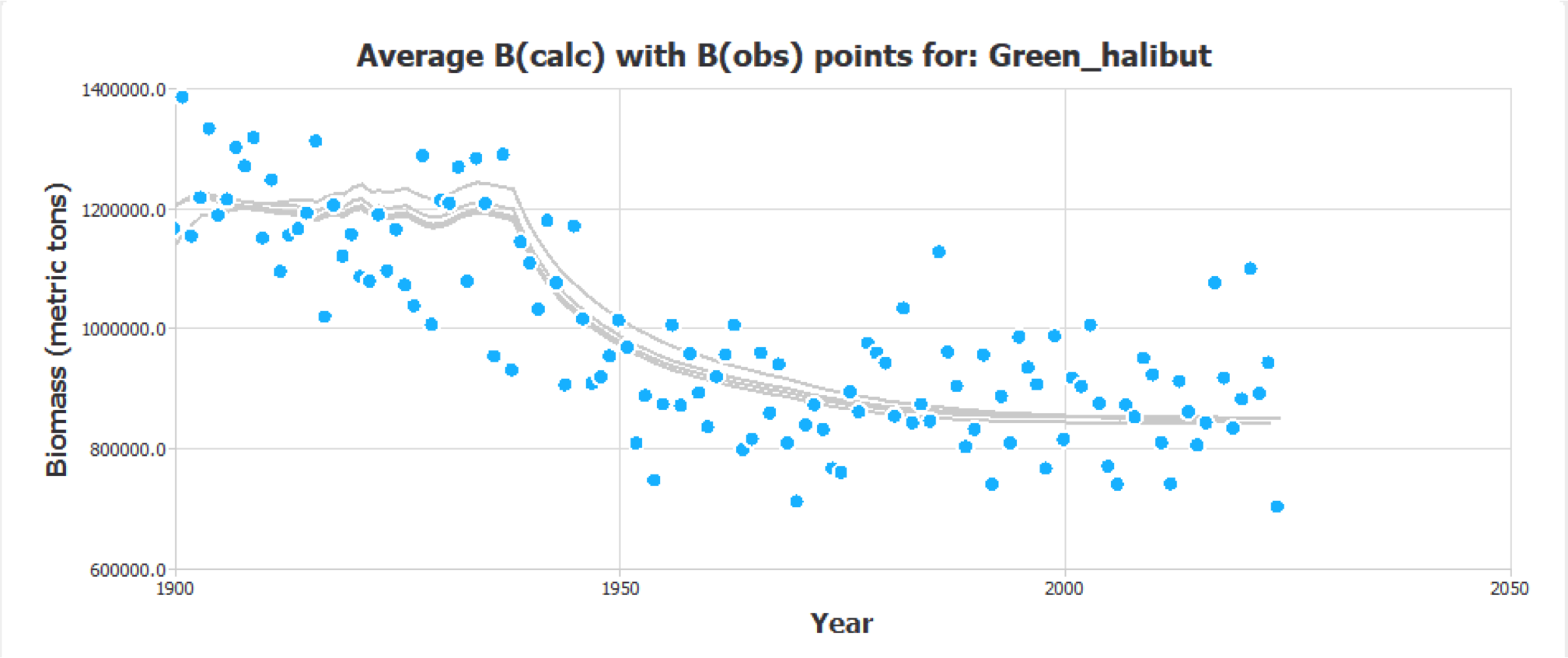

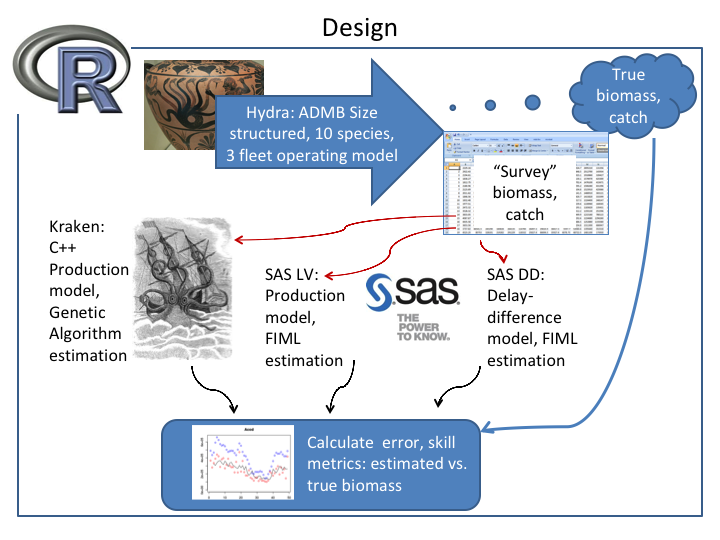

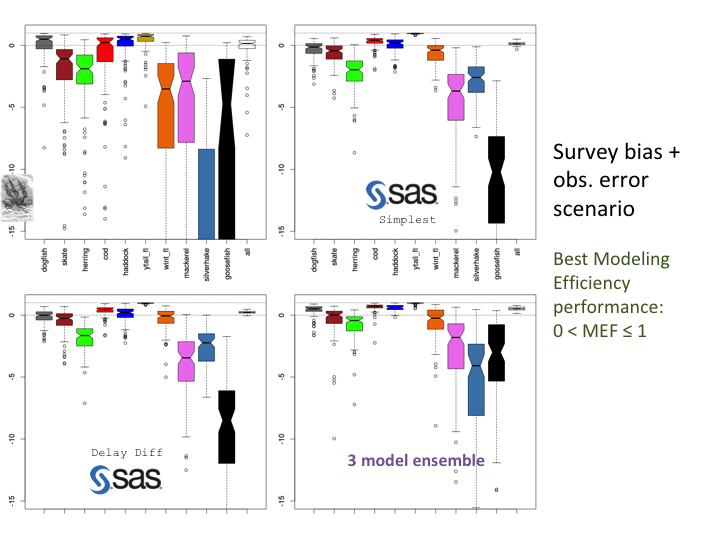

class: right, middle, my-title, title-slide

# Challenging assessment models<br /> with realistic complexity

## Atlantis Summit 2022 Day 3 Keynote

### Sarah Gaichas<br /> Northeast Fisheries Science Center<br /> <br /> Many thanks to:<br /> (atlantisom development): Christine Stawitz, Kelli Johnson, Alexander Keth, Allan Hicks, Sean Lucey, Emma Hodgson, Gavin Fay <br /> (Atlantis): Isaac Kaplan, Cecilie Hansen, Beth Fulton <br /> (atlantisom use): Bai Li, Alphonso Perez Rodriguez, Howard Townsend <br /> (skill assessment design): Patrick Lynch

---
class: top, left

## Outline

.pull-left[
* Management decisions and models
* Assessment model testing
* What do models need to do?
* Challenges with what models need to do
* Addressing challenges with Atlantis
* Skill assessment with Atlantis "data"
    1. R package `atlantisom`
    1. Single species assessment
    1. Multispecies assessment
    1. Other models? Ensembles?
]

.pull-right[
* Discussion: what's next
    + Automation of other model inputs!
    + Atlantis output verification
    + New outputs?
    + Best practices for
        + dataset creation
        + parameter estimation
]

---
background-image: url("EDAB_images/noaafisheries.png")
background-size: 900px
background-position: bottom center

# We do a lot of assessments

.center[
<!---->
]

---
background-image: url("EDAB_images/ecomods.png")
background-size: 900px
background-position: bottom center

# With a wide range of models

.center[
<!---->
]

---
background-image: url("EDAB_images/modeling_study.png")
background-size: 500px
background-position: right bottom

# How do we know they are right?

* Fits to historical data (hindcast)
* Influence of data over time (retrospective diagnostics)
* Keep as simple and focused as possible
* Simulation testing

# But, what if

## data are noisy?

## we need to model complex interactions?

## conditions change over time?
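Here is a minimal sketch of simulation testing when data are noisy. It makes three assumptions. A known "true" biomass series stands in for the operating model. Observations carry mean-unbiased lognormal error with a chosen CV. The "assessment" is simply the mean of the observed series. The function names and numbers are hypothetical. Because the truth is known, each replicate can be scored by relative error, (estimate - truth) / truth.

```python
import math
import random
import statistics


def lognormal_obs(true_value, cv, rng):
    """One observation of true_value with lognormal error of the given CV.

    sigma makes the lognormal CV equal cv; the -sigma**2 / 2 mean shift
    makes the observation unbiased on the natural scale.
    """
    sigma = math.sqrt(math.log(1.0 + cv ** 2))
    return true_value * math.exp(rng.gauss(-0.5 * sigma ** 2, sigma))


def simulation_test(true_biomass, cv, n_reps=500, seed=1):
    """Simulate noisy data from a known truth, 'assess', and score.

    The stand-in assessment is the mean of the observed series; it is
    scored by relative error against the mean of the true series.
    """
    rng = random.Random(seed)
    truth = statistics.mean(true_biomass)
    rel_errors = []
    for _ in range(n_reps):
        obs = [lognormal_obs(b, cv, rng) for b in true_biomass]
        estimate = statistics.mean(obs)
        rel_errors.append((estimate - truth) / truth)
    return rel_errors


true_biomass = [1000.0, 950.0, 900.0, 980.0, 1100.0]  # hypothetical truth
for cv in (0.1, 0.5):
    rel_err = simulation_test(true_biomass, cv)
    print(f"cv={cv}: median RE={statistics.median(rel_err):+.3f}, "
          f"sd of RE={statistics.stdev(rel_err):.3f}")
```

Raising the CV widens the spread of relative error, even though this estimator is unbiased. A model that leaves out a real process would instead shift the center of the distribution away from zero.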
.footnote[
https://xkcd.com/2323/
]

---
background-image: url("EDAB_images/forageschool.png")
background-size: 450px
background-position: right bottom

## Fisheries: what do we need to know? What do models need to estimate?

**How many fish can be caught sustainably?**

.pull-left[
* How many are there right now?
* How many were there historically?
* How productive are they (growth, reproduction)?
* How many are caught right now?
* How many were caught historically?
]

.pull-right[
* Current biomass overall and at age/size
* Reconstruct past population dynamics
* Individual and population growth
* How many should be caught next year?
* How certain are we?
]

--

**"New" questions and challenges, broader management objectives**

.bluetext[
* <span style="color:blue">What supports their productivity?</span>
* <span style="color:blue">What does their productivity support, besides fishing?</span>
* <span style="color:blue">How do they interact with other fish, fisheries, marine animals?</span>
* <span style="color:blue">How do environmental changes affect them?</span>
* <span style="color:blue">What is their ecological, economic, and social value to people?</span>
]

---

# Risks to meeting fishery management objectives

.center[
]

.center[
]

---
background-image: url("EDAB_images/seasonal-sst-anom-gridded-2021.png")
background-size: 600px
background-position: right top

## Risks: Climate change indicators in US Mid-Atlantic

.pull-left[
Indicators: ocean currents, bottom and surface temperature, marine heatwaves

<img src="20220504_AtlantisSummitKeynote_Gaichas_files/figure-html/unnamed-chunk-2-1.png" width="288" style="display: block; margin: auto;" />
<img src="20220504_AtlantisSummitKeynote_Gaichas_files/figure-html/unnamed-chunk-3-1.png" width="288" style="display: block; margin: auto;" />
<img src="20220504_AtlantisSummitKeynote_Gaichas_files/figure-html/unnamed-chunk-4-1.png" width="504" style="display: block; margin: auto;" />
]

.pull-right[
<img src="20220504_AtlantisSummitKeynote_Gaichas_files/figure-html/unnamed-chunk-5-1.png" width="360" style="display: block; margin: auto;" />
]

???
A marine heatwave is a warming event that lasts for five or more days with sea surface temperatures above the 90th percentile of the historical daily climatology (1982-2011).

---

## The stock assessment community is well aware of this

.pull-left[
- Changing climate and ocean conditions → Shifting species distributions, changing productivity

- Needs:
    - Improve our ability to project global change impacts in the ecosystems around the world
    - Test the performance of stock assessments under these impacts
    - *Design assessment methods that perform well despite these impacts*
]

.pull-right[
*Climate-Ready Management, [Karp et al. 2019]()*
]

???

---

## Skill assessment background (see [Journal of Marine Systems Special Issue](https://www.sciencedirect.com/journal/journal-of-marine-systems/vol/76/issue/1))

.pull-left-40[
[Stow et al. 2009](https://www.sciencedirect.com/science/article/abs/pii/S0924796308001103?via%3Dihub)
]

.pull-right-60[
[Olsen et al. 2016](https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0146467)
]

???
"Both our model predictions and the observations reside in a halo of uncertainty and the true state of the system is assumed to be unknown, but lie within the observational uncertainty (Fig. 1a). A model starts to have skill when the observational and predictive uncertainty halos overlap, in the ideal case the halos overlap completely (Fig. 1b). Thus, skill assessment requires a set of quantitative metrics and procedures for comparing model output with observational data in a manner appropriate to the particular application."

---

## Skill assessment with ecological interactions... fit criteria alone are not sufficient

*Ignore predation at your peril: results from multispecies state-space modeling* [Trijoulet et al. 2020](https://besjournals.onlinelibrary.wiley.com/doi/full/10.1111/1365-2664.13515)

>Ignoring trophic interactions that occur in marine ecosystems induces bias in stock assessment outputs and results in low model predictive ability with subsequently biased reference points.

.pull-left-40[
EM1: multispecies state space

EM2: multispecies, no process error

EM3: single sp. state space, constant M

EM4: single sp. state space, age-varying M

*note difference in scale of bias for single species!*
]

.pull-right-60[
]

???
This is an important paper both because it demonstrates the importance of addressing strong species interactions and because it shows that measures of fit do not indicate good model predictive performance. Ignoring process error also caused bias, but much less than ignoring species interactions did.

See also Vanessa's earlier paper evaluating diet data interactions with multispecies models

---

## Virtual worlds with adequate complexity: end-to-end ecosystem models

Atlantis modeling framework: [Fulton et al. 2011](https://onlinelibrary.wiley.com/doi/full/10.1111/j.1467-2979.2011.00412.x), [Fulton and Smith 2004](https://www.ajol.info/index.php/ajms/article/view/33182)

.pull-left[
**Norwegian-Barents Sea**

[Hansen et al. 2016](https://www.imr.no/filarkiv/2016/04/fh-2-2016_noba_atlantis_model_til_web.pdf/nn-no), [2018](https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0210419)
]

.pull-right[
**California Current**

[Marshall et al. 2017](https://onlinelibrary.wiley.com/doi/full/10.1111/gcb.13594), [Kaplan et al. 2017](https://www.sciencedirect.com/science/article/pii/S0304380016308262?via%3Dihub)
]

Building on global change projections: [Hodgson et al. 2018](https://www.sciencedirect.com/science/article/pii/S0304380018301856?via%3Dihub), [Olsen et al. 2018](https://www.frontiersin.org/articles/10.3389/fmars.2018.00064/full)

???
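---

## Skill assessment metrics: a minimal sketch

Stow et al. 2009 call for quantitative metrics when comparing model output with observations. This plain-Python sketch computes five common ones: average error, average absolute error, RMSE, correlation, and modeling efficiency. The function name and example values are hypothetical. In simulation testing, the "observations" can be the operating model truth and the "predictions" the assessment estimates.

```python
import math


def skill_metrics(pred, obs):
    """Compare paired predictions (pred) with observations (obs).

    AE   average error (bias): mean(P - O)
    AAE  average absolute error: mean(|P - O|)
    RMSE root mean squared error
    r    Pearson correlation between P and O (nan if pred is constant)
    MEF  modeling efficiency: 1 is perfect, 0 is no better than the
         observed mean, negative is worse than the observed mean
    """
    n = len(obs)
    if n != len(pred) or n < 2:
        raise ValueError("need two equal-length series, length >= 2")
    mean_p = sum(pred) / n
    mean_o = sum(obs) / n
    ss_obs = sum((o - mean_o) ** 2 for o in obs)
    if ss_obs == 0:
        raise ValueError("observations are constant; r and MEF undefined")
    ss_pred = sum((p - mean_p) ** 2 for p in pred)
    cov = sum((p - mean_p) * (o - mean_o) for p, o in zip(pred, obs))
    diffs = [p - o for p, o in zip(pred, obs)]
    ss_err = sum(d ** 2 for d in diffs)
    return {
        "AE": sum(diffs) / n,
        "AAE": sum(abs(d) for d in diffs) / n,
        "RMSE": math.sqrt(ss_err / n),
        "r": cov / math.sqrt(ss_pred * ss_obs) if ss_pred > 0 else float("nan"),
        "MEF": (ss_obs - ss_err) / ss_obs,
    }


truth = [10, 12, 14, 16, 18]      # e.g. operating-model biomass (hypothetical)
estimate = [11, 13, 15, 17, 19]   # assessment estimates, biased by +1
print(skill_metrics(estimate, truth))
# → {'AE': 1.0, 'AAE': 1.0, 'RMSE': 1.0, 'r': 1.0, 'MEF': 0.875}
```

Every estimate here is biased by +1, yet r = 1.0 and MEF = 0.875. No single statistic tells the whole story.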
---

## Design: Ecosystem model scenario (climate and fishing)

<img src="EDAB_images/projectionOMsetup.png" width="85%" style="display: block; margin: auto;" />

???
.pull-left[
* Recruitment variability in the operating model
* Specify uncertainty in assessment inputs using `atlantisom`
]

.pull-right[
]

---

## Designing skill assessment with Atlantis

.pull-left[
]

.pull-right[
]

---

## Overview of `atlantisom` R package: [link](https://sgaichas.github.io/poseidon-dev/atlantisom_landingpage.html)

.pull-left[
Started at the 2015 Atlantis Summit
]

.pull-right[
]

---
background-image: url("https://github.com/sgaichas/poseidon-dev/raw/master/docs/images/atlantisomDataFlow_truth-link.png")
background-size: 850px
background-position: right bottom

## `atlantisom` workflow: get "truth"

<!---->

* locate files
* run `om_init`
* select species
* run `om_species`

---
background-image: url("https://github.com/sgaichas/poseidon-dev/raw/master/docs/images/atlantisomDataFlow_data.png")
background-size: 625px
background-position: right top

## `atlantisom` workflow: get "data"

<!---->

* specify surveys
    + (can now have many, file for each)
    + area/time subsetting
    + efficiency (q) by species
    + selectivity by species
    + biological sample size
    + index cv
    + length at age cv
    + max size bin
* specify survey diet sampling (new)
    + requires detaileddietcheck.txt
    + diet sampling parameters
* specify fishery
    + area/time subsetting
    + biological sample size
    + catch cv
* run `om_index`
* run `om_comps`
* run `om_diet`
* environmental data functions too

---

## `atlantisom` outputs, survey biomass index, [link](https://sgaichas.github.io/poseidon-dev/msSurveysTest.html)

.pull-left[
Perfect information (one season)
]

.pull-right[
Survey with catchability and selectivity
]

---

## `atlantisom` outputs, age and length compositions, [link](https://sgaichas.github.io/poseidon-dev/msSurveysTest.html)

.pull-left[
.center[
]
]

.pull-right[
.center[
]
]

---

## `atlantisom` outputs, diet compositions, [link](https://sgaichas.github.io/poseidon-dev/SurveyDietCompTest.html)

.pull-left[
]

.pull-right[
]

---

## Testing a simple "sardine" assessment, CC Atlantis [Kaplan et al. 2021](https://www.frontiersin.org/articles/10.3389/fmars.2021.624355/full)

.pull-left[
]

.pull-right[
Will revisit with newer CC model; issues with different growth than assumed in SS setup?
]

---

## Cod assessment based on NOBA Atlantis (Li, WIP)

https://github.com/Bai-Li-NOAA/poseidon-dev/blob/nobacod/NOBA_cod_files/README.MD

.pull-left[
Conversion from SAM to SS successful
]

.pull-right[
Fitting to NOBA data more problematic
]

---

## Multispecies assessment based on NOBA Atlantis (Townsend et al., WIP)

.pull-left[
Stepwise development process: self-fitting, fitting to Atlantis output, then skill assessment using Atlantis output

Profiles for estimated parameters; but *what to compare K values to?*
]

.pull-right[
Can test model diagnostic tools as well

Using simulated data in [`mskeyrun` package](https://noaa-edab.github.io/ms-keyrun/), available to all
]

---

## P.S. What else could we test?

.center[
]

.footnote[
https://xkcd.com/1885/
]

---

## Multispecies production model ensemble assessment--use Atlantis instead

.pull-left[
]

.pull-right[
]

---
background-image: url("https://github.com/NOAA-EDAB/presentations/raw/master/docs/EDAB_images/scenario_4.png")
background-size: 500px
background-position: right

## Difficulties so far

.pull-left-60[
Atlantis related

* Understanding Atlantis outputs (much improved with documentation since 2015)
* Reconciling different Atlantis outputs: which to use?
* Are calculations correct? Attempted M, per capita consumption

Skill assessment related

* Running stock assessment models is difficult to automate. A lot of decisions are made by iterative running and diagnostic checks.
* Generating input parameters for models that are consistent with Atlantis can be time consuming (Atlantis is a little too realistic...)
    + Fit von Bertalanffy (vonB) length-at-age models to atlantisom output for input to a length-based model; not all converge (!)
    + What is M (see above)
]

.pull-right-40[
.center[
.footnote[
https://xkcd.com/2289/
]
]
]

???
+ `atlantisom` is using outputs not often used in other applications
+ I don't run Atlantis, so putting print statements in the code is not an option
+ could be more efficient with targeted group work
+ should we expect numbers in one output to match those in others?
    + diet comp from detailed file matches diet comp in simpler output
    + catch in numbers not always matching between standard and annual age outputs
    + YOY output
    + ... others that have been encountered
+ estimating per capita consumption from detaileddiet.txt results in lower numbers than expected
+ still can't get reasonable mortality estimates from outputs--understand this is an issue

---

## Atlantis and assessment model skill assessment: Thank you and Discussion

Fisheries stock assessment and ecosystem modeling continue to develop. .bluetext[Can we build assessments to keep pace with climate?]

Interested in your thoughts:

* Is this a good use of Atlantis model outputs?
* Any obvious errors in `atlantisom` setup?
* How to improve `atlantisom`?
    + Functions to write specific model inputs in progress (SS included, more complex models in own packages/repositories)
    + New package with skill assessment functions?
    + Update documentation and vignettes
    + Integrate/update with other R Atlantis tools
    + Other?
* What are best practices to use Atlantis model outputs in skill assessment?
    + Spatial and temporal scale that is most appropriate
    + Calculating assessment model input parameters from Atlantis outputs
    + Storing Atlantis outputs for others to use (large file size)
    + Other?

.footnote[
Slides available at https://noaa-edab.github.io/presentations

Contact: <Sarah.Gaichas@noaa.gov>
]
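---

## Extra: sketching survey "data" from "truth"

The `atlantisom` "get data" workflow lists survey inputs such as efficiency (q), selectivity, index cv, and biological sample size. That workflow runs `om_index` and `om_comps` in R. What follows is not their implementation. It is a minimal Python sketch of the general idea under stated assumptions: a hypothetical logistic selectivity, a list index standing in for age (starting at 0), mean-unbiased lognormal index error, and a multinomial age-composition sample. All parameter names and numbers are hypothetical.

```python
import math
import random


def logistic_selectivity(age, a50, slope):
    """Hypothetical logistic selectivity: equals 0.5 at age a50."""
    return 1.0 / (1.0 + math.exp(-slope * (age - a50)))


def survey_index(numbers_at_age, weight_at_age, q, a50, slope, cv, rng):
    """Return (true, observed) survey biomass index for one year.

    true index = q * sum over ages of selectivity * numbers * weight;
    the observed index adds mean-unbiased lognormal error with CV cv.
    """
    true_index = q * sum(
        logistic_selectivity(age, a50, slope) * n * w
        for age, (n, w) in enumerate(zip(numbers_at_age, weight_at_age))
    )
    sigma = math.sqrt(math.log(1.0 + cv ** 2))
    observed = true_index * math.exp(rng.gauss(-0.5 * sigma ** 2, sigma))
    return true_index, observed


def sample_age_comp(numbers_at_age, a50, slope, sample_size, rng):
    """Multinomial age composition (proportions) of sampled survey fish."""
    vulnerable = [logistic_selectivity(age, a50, slope) * n
                  for age, n in enumerate(numbers_at_age)]
    ages = rng.choices(range(len(vulnerable)), weights=vulnerable, k=sample_size)
    counts = [0] * len(vulnerable)
    for a in ages:
        counts[a] += 1
    return [c / sample_size for c in counts]


rng = random.Random(42)
N = [5000, 3000, 1800, 1000, 500, 250]   # hypothetical numbers at age 0-5
W = [0.05, 0.3, 0.8, 1.4, 2.0, 2.5]      # hypothetical weight at age (kg)
true_idx, obs_idx = survey_index(N, W, q=0.6, a50=2.0, slope=1.5, cv=0.25, rng=rng)
comp = sample_age_comp(N, a50=2.0, slope=1.5, sample_size=200, rng=rng)
print(round(true_idx, 1), round(obs_idx, 1))
print([round(p, 3) for p in comp])
```

Keeping the "true" index alongside the observed one is what makes skill assessment possible later. Assessment estimates can be compared with the quantity that generated the data, not only with the noisy data themselves.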